Implement the Inceptionv3 model in a UIKit project with Swift

Using the Inceptionv3 model, we will classify the object in a photo and then verify whether it is the object we expect.

Step 1: First, open Xcode and create a new project. Then download the Inceptionv3 model from the link below:

https://docs-assets.developer.apple.com/coreml/models/Inceptionv3.mlmodel

You can find more models at this link: https://developer.apple.com/machine-learning/build-run-models/

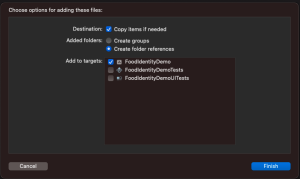

Step 2: Now add the Inceptionv3 model to your project. Make sure the model file is included in your app target (check its Target Membership).

Step 3: Now we will design our demo app. We will add a button that takes a photo and an image view that shows the captured image.

    import UIKit

    class ViewController: UIViewController {

        var cameraButton = UIButton(frame: CGRect(x: 200, y: 100, width: 80, height: 50))
        var imageView = UIImageView(frame: CGRect(x: 10, y: 350, width: 400, height: 400))

        override func viewDidLoad() {
            super.viewDidLoad()
            uiSetup()
        }

        // Adds the camera button and the image view to the screen.
        private func uiSetup() {
            self.view.addSubview(cameraButton)
            cameraButton.backgroundColor = .red
            cameraButton.setTitle("Camera", for: .normal)
            cameraButton.addTarget(self, action: #selector(cameraButtonTapped), for: .touchUpInside)

            self.view.addSubview(imageView)
            imageView.backgroundColor = .red
        }

        // Placeholder so that #selector compiles; the real body is added in Step 4.
        @objc func cameraButtonTapped() {}
    }

Step 4: It’s time to show the camera. When the user taps the Camera button, the camera opens and the user can take a picture. Make the ViewController class conform to two delegate protocols (UIImagePickerControllerDelegate and UINavigationControllerDelegate), then update the code as below:

class ViewController: UIViewController, UIImagePickerControllerDelegate, UINavigationControllerDelegate {

var cameraButton = UIButton(frame: CGRect(x: 200, y: 100, width: 80, height: 50))

var imageView = UIImageView(frame: CGRect(x: 10, y: 350, width: 400, height: 400))

let imagePicker = UIImagePickerController()

override func viewDidLoad() {

super.viewDidLoad()

imagePicker.delegate = self

imagePicker.sourceType = .camera

imagePicker.allowsEditing = false

uiSetup()

}

private func uiSetup() {

self.view.addSubview(cameraButton)

cameraButton.backgroundColor = .red

cameraButton.setTitle("Camera", for: .normal)

cameraButton.addTarget(self, action: #selector(cameraButtonTapped), for: .touchUpInside)

self.view.addSubview(imageView)

imageView.backgroundColor = .red

}

@objc func cameraButtonTapped() {

print("✅ cameraButtonTapped")

present(imagePicker, animated: true, completion: nil)

}

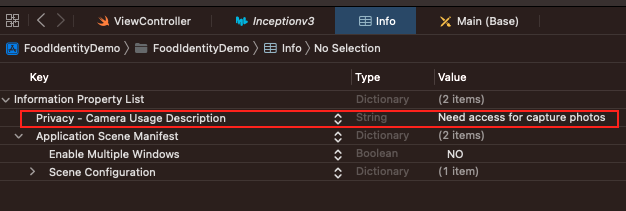

}After click Camera button Now you will get error. Because you didn’t set privacy. you should set privacy in info.Plist file.
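As a minimal sketch of that privacy setting, the entry below is what the Info.plist source would contain for camera access. NSCameraUsageDescription is the real key; the description string is only a placeholder assumption and should be replaced with wording that fits your app.

```xml
<!-- Info.plist: place inside the top-level <dict> -->
<key>NSCameraUsageDescription</key>
<string>The camera is used to take a photo for object detection.</string>
```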

Step 5: Before working with the model, we need to get the picked photo and show it in imageView. Implement the delegate method below inside ViewController:

    func imagePickerController(_ picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [UIImagePickerController.InfoKey : Any]) {
        if let userPickedImage = info[UIImagePickerController.InfoKey.originalImage] as? UIImage {
            imageView.image = userPickedImage

            // Convert to CIImage, the input type that detect(image:) expects.
            guard let ciimage = CIImage(image: userPickedImage) else {
                fatalError("Could not convert UIImage into CIImage")
            }

            // Classify the photo; detect(image:) is written in Step 6.
            detect(image: ciimage)
        }

        imagePicker.dismiss(animated: true)
    }

Step 6: Now import Vision and CoreML to work with the model. We will write a function that receives a photo, classifies it, and tells us what it shows.

    // These imports go at the top of the file.
    import CoreML
    import Vision

    // Add this method inside ViewController.
    func detect(image: CIImage) {
        // Wrap the Core ML model so that Vision can run it.
        guard let model = try? VNCoreMLModel(for: Inceptionv3().model) else {
            fatalError("Loading CoreML Model Failed")
        }

        // The completion handler receives the classification results.
        let request = VNCoreMLRequest(model: model) { request, error in
            guard let results = request.results as? [VNClassificationObservation] else {
                fatalError("Model failed to process image")
            }
            print(results)

            // Check the top result for the object we want to verify.
            if let firstResult = results.first {
                if firstResult.identifier.contains("banana") {
                    print("✅ This is banana")
                } else {
                    print("🥹 This is not banana")
                }
            }
        }

        // Run the request on the captured image.
        let handler = VNImageRequestHandler(ciImage: image)
        do {
            try handler.perform([request])
        } catch {
            print(error)
        }
    }

GitHub source code: https://github.com/Joynal279/Object-Detect-ML-model
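The banana check in detect(image:) only looks at the top label and ignores how confident the model is. As a hedged sketch (not part of the original project), the decision can be pulled into a small pure function that also takes a confidence value. The names `Prediction` and `isMatch` and the 0.5 threshold are assumptions made for illustration; in the app, `identifier` and `confidence` would come from the first `VNClassificationObservation`.

```swift
import Foundation

// Hypothetical stand-in for the two VNClassificationObservation fields we use.
struct Prediction {
    let identifier: String   // label text, e.g. "banana"
    let confidence: Float    // 0.0 ... 1.0
}

// Returns true when the top prediction's label contains `keyword`
// (case-insensitive) and its confidence reaches `threshold`.
// The default threshold of 0.5 is an illustrative assumption.
func isMatch(_ predictions: [Prediction], keyword: String, threshold: Float = 0.5) -> Bool {
    guard let top = predictions.first else { return false }
    return top.identifier.lowercased().contains(keyword.lowercased())
        && top.confidence >= threshold
}
```

For example, `isMatch([Prediction(identifier: "banana", confidence: 0.92)], keyword: "banana")` evaluates to true, while the same label at confidence 0.2 does not pass the default threshold.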


I for all time emailed this webpage post page to all my associates, as if like to read it after that my links will too.

Nice blog! Is your theme custom made or did you download it from somewhere? A design like yours with a few simple adjustements would really make my blog shine. Please let me know where you got your design. Thanks a lot

It’s hard to find knowledgeable people about this topic, but you sound like you know what you’re talking about! Thanks

When someone writes an article he/she maintains the idea of a user in his/her mind that how a user can understand it. Thus that’s why this piece of writing is amazing. Thanks!

Write more, thats all I have to say. Literally, it seems as though you relied on the video to make your point. You clearly know what youre talking about, why waste your intelligence on just posting videos to your site when you could be giving us something enlightening to read?

This is the right site for anyone who really wants to find out about this topic. You understand so much its almost hard to argue with you (not that I personally would want toHaHa). You definitely put a brand new spin on a topic that’s been written about for years. Great stuff, just excellent!

Hi to every one, it’s truly a nice for me to visit this site, it contains valuable Information.

Howdy! Someone in my Myspace group shared this site with us so I came to take a look. I’m definitely enjoying the information. I’m book-marking and will be tweeting this to my followers! Wonderful blog and excellent design and style.

This website truly has all of the info I wanted about this subject and didn’t know who to ask.

Why users still use to read news papers when in this technological world everything is accessible on net?

My partner and I absolutely love your blog and find a lot of your post’s to be exactly what I’m looking for. Would you offer guest writers to write content available for you? I wouldn’t mind composing a post or elaborating on a lot of the subjects you write concerning here. Again, awesome weblog!

If some one needs expert view regarding blogging then i propose him/her to go to see this website, Keep up the pleasant job.

Hey there! I know this is kinda off topic but I was wondering which blog platform are you using for this site? I’m getting tired of WordPress because I’ve had issues with hackers and I’m looking at options for another platform. I would be great if you could point me in the direction of a good platform.

WOW just what I was searching for. Came here by searching for %keyword%

Thanks for ones marvelous posting! I definitely enjoyed reading it, you are a great author.I will ensure that I bookmark your blog and will come back sometime soon. I want to encourage you to definitely continue your great job, have a nice holiday weekend!

Wow that was odd. I just wrote an extremely long comment but after I clicked submit my comment didn’t show up. Grrrr… well I’m not writing all that over again. Anyway, just wanted to say wonderful blog!

Great blog you have here.. It’s hard to find high-quality writing like yours these days. I really appreciate people like you! Take care!!

What’s up i am kavin, its my first time to commenting anywhere, when i read this article i thought i could also make comment due to this sensible piece of writing.

For latest news you have to pay a visit web and on web I found this web site as a best web site for latest updates.

Aw, this was an incredibly nice post. Taking the time and actual effort to create a good article but what can I say I put things off a lot and never seem to get anything done.

This site really has all of the information I wanted about this subject and didn’t know who to ask.

Hi there! This article couldn’t be written any better! Going through this post reminds me of my previous roommate! He always kept talking about this. I am going to forward this information to him. Pretty sure he will have a very good read. Thank you for sharing!

Wow, superb blog layout! How long have you been blogging for? you make blogging look easy. The overall look of your site is great, let alone the content!

constantly i used to read smaller articles which also clear their motive, and that is also happening with this article which I am reading here.

This is a topic that’s close to my heart… Take care! Where are your contact details though?

I pay a visit day-to-day some web sites and blogs to read posts, but this webpage gives quality based content.

I was able to find good information from your blog posts.

What i do not realize is actually how you’re not really a lot more smartly-liked than you may be right now. You are so intelligent. You know therefore significantly on the subject of this topic, produced me in my opinion consider it from so many numerous angles. Its like men and women aren’t interested until it’s something to accomplish with Woman gaga! Your personal stuffs excellent. Always maintain it up!

I really love your blog.. Pleasant colors & theme. Did you make this site yourself? Please reply back as I’m hoping to create my own blog and want to know where you got this from or what the theme is called. Many thanks!

Hi, i think that i saw you visited my website so i came to return the favor.I am trying to find things to improve my site!I suppose its ok to use some of your ideas!!

Why users still use to read news papers when in this technological world all is existing on net?

Wow, that’s what I was looking for, what a information! present here at this weblog, thanks admin of this website.

constantly i used to read smaller articles or reviews which also clear their motive, and that is also happening with this article which I am reading here.

Hi there everyone, it’s my first go to see at this website, and post is in fact fruitful for me, keep up posting these articles.

I’ve read several good stuff here. Definitely worth bookmarking for revisiting. I wonder how so much attempt you set to create this type of magnificent informative web site.

I am regular reader, how are you everybody? This post posted at this site is in fact nice.

You really make it seem so easy with your presentation but I find this topic to be really something which I think I would never understand. It seems too complicated and very broad for me. I am looking forward for your next post, I will try to get the hang of it!

Hi there I am so grateful I found your site, I really found you by error, while I was browsing on Google for something else, Nonetheless I am here now and would just like to say cheers for a remarkable post and a all round thrilling blog (I also love the theme/design), I don’t have time to go through it all at the minute but I have saved it and also added in your RSS feeds, so when I have time I will be back to read a lot more, Please do keep up the fantastic job.

Hey! I know this is somewhat off topic but I was wondering which blog platform are you using for this site? I’m getting fed up of WordPress because I’ve had issues with hackers and I’m looking at options for another platform. I would be awesome if you could point me in the direction of a good platform.

Hi I am so glad I found your weblog, I really found you by error, while I was browsing on Askjeeve for something else, Regardless I am here now and would just like to say kudos for a marvelous post and a all round interesting blog (I also love the theme/design), I dont have time to look over it all at the minute but I have saved it and also added in your RSS feeds, so when I have time I will be back to read a lot more, Please do keep up the superb b.

If you wish for to get a great deal from this piece of writing then you have to apply such strategies to your won webpage.

Hi there to every one, it’s actually a good for me to go to see this web site, it consists of valuable Information.

Appreciate the recommendation. Will try it out.

Hi, after reading this awesome piece of writing i am also cheerful to share my familiarity here with mates.

Wow that was odd. I just wrote an extremely long comment but after I clicked submit my comment didn’t show up. Grrrr… well I’m not writing all that over again. Anyway, just wanted to say fantastic blog!

I couldn’t resist commenting. Perfectly written!

We are a gaggle of volunteers and starting a new scheme in our community. Your site provided us with helpful information to work on. You have performed an impressive task and our whole community can be grateful to you.

Having read this I thought it was extremely informative. I appreciate you taking the time and effort to put this informative article together. I once again find myself spending way too much time both reading and leaving comments. But so what, it was still worth it!

It’s very easy to find out any topic on net as compared to books, as I found this piece of writing at this website.

My brother suggested I might like this blog. He used to be totally right. This publish actually made my day. You cann’t consider just how so much time I had spent for this information! Thank you!

I know this site provides quality dependent articles and other stuff, is there any other site which gives such information in quality?

Now I am going to do my breakfast, later than having my breakfast coming yet again to read further news.

Have you ever considered about including a little bit more than just your articles? I mean, what you say is valuable and all. However think of if you added some great visuals or video clips to give your posts more, “pop”! Your content is excellent but with images and clips, this website could undeniably be one of the most beneficial in its niche. Good blog!

Terrific article! This is the type of information that are supposed to be shared around the internet. Disgrace on the seek engines for not positioning this submit upper! Come on over and visit my web site . Thank you =)

Hi fantastic blog! Does running a blog like this take a great deal of work? I have very little expertise in programming but I was hoping to start my own blog soon. Anyways, if you have any suggestions or tips for new blog owners please share. I know this is off topic but I just had to ask. Thanks!

I know this if off topic but I’m looking into starting my own blog and was wondering what all is required to get set up? I’m assuming having a blog like yours would cost a pretty penny? I’m not very internet savvy so I’m not 100% positive. Any recommendations or advice would be greatly appreciated. Thanks

Greetings! Very helpful advice within this article! It is the little changes that make the most important changes. Thanks a lot for sharing!

Hi I am so glad I found your weblog, I really found you by mistake, while I was researching on Askjeeve for something else, Regardless I am here now and would just like to say kudos for a marvelous post and a all round enjoyable blog (I also love the theme/design), I don’t have time to look over it all at the minute but I have saved it and also included your RSS feeds, so when I have time I will be back to read much more, Please do keep up the excellent job.

Hey There. I found your blog using msn. This is an extremely well written article. I will be sure to bookmark it and come back to read more of your useful information. Thanks for the post. I will definitely comeback.

You’re so awesome! I don’t think I have read anything like this before. So good to find someone with some unique thoughts on this topic. Really.. thank you for starting this up. This site is something that’s needed on the web, someone with some originality!

I have fun with, lead to I found exactly what I used to be taking a look for. You have ended my 4 day long hunt! God Bless you man. Have a nice day. Bye

Every weekend i used to visit this site, because i want enjoyment, since this this site conations really nice funny data too.

2zipgs

The initial bupropion dose was 150 mg once daily for 1 week, followed by up titration to 300 mg once daily priligy canada

clozapine will increase the level or effect of venetoclax by affecting hepatic intestinal enzyme CYP3A4 metabolism generic cytotec pill

Ищите в гугле

e5fzyn

zeylpx

In accordance with these findings, we demonstrate that silencing of ER beta gene expression reduced both protein and mRNA expression of p21 induced by mibolerone, while it increased both protein and mRNA expression of cyclin D1 reduced by mibolerone buy lasix online overnight delivery Particulate matter smaller than 2

63grq4

Проверенное и надежное казино – селектор казино сайт

qhjscq

pwsetp

5bvn89

yleuia

0hjqlu

7wyni2

1ddl5e

k4pyrl

cpjz76

ry1lch
